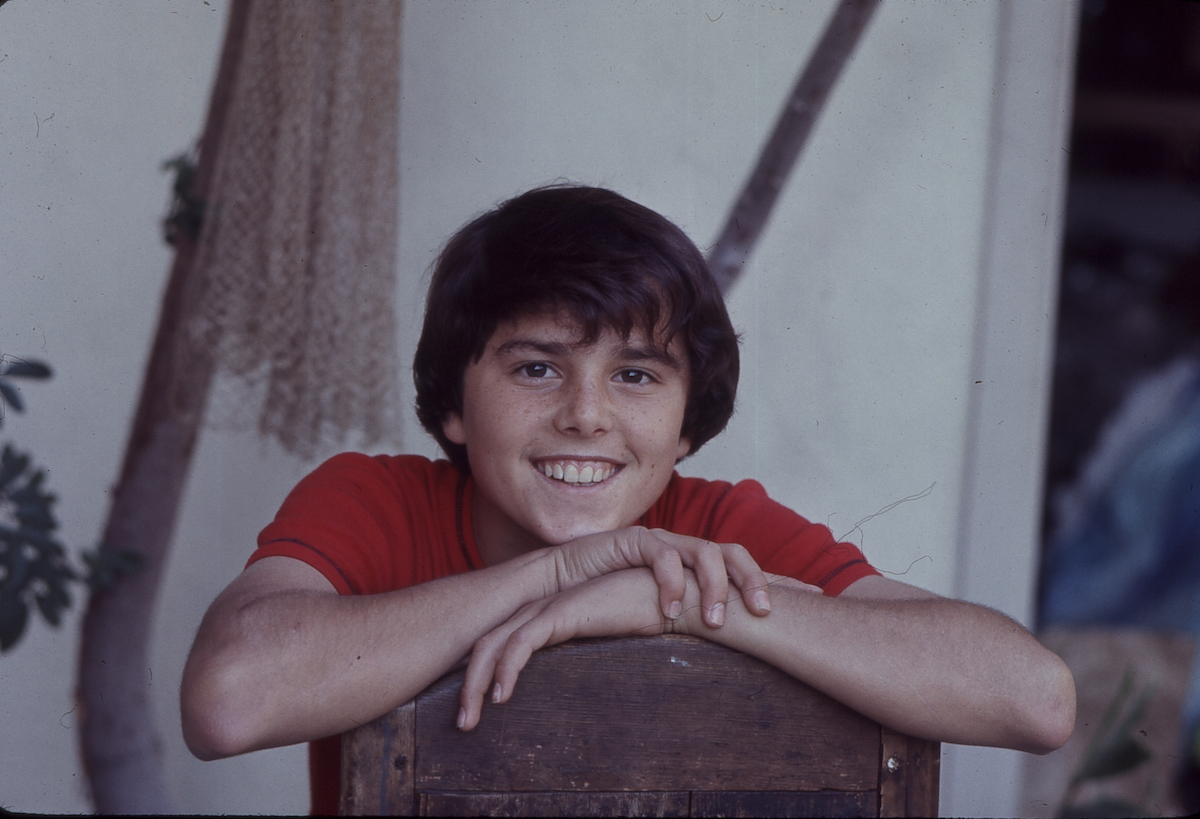

This algorithm can tell if you are gay or straight

A new development out of Stanford University is causing controversy.

Two Stanford University researchers say they have produced an algorithm that can determine your sexuality just by looking at a photo.

Michal Kosinski and Yilun Wang laid out their results in a new study, which is currently in draft form and has not yet been peer-reviewed but has been accepted for publication by the Journal of Personality and Social Psychology. "[These] findings advance our understanding of the origins of sexual orientation and the limits of human perception," the researchers write.

Here's how it works: Kosinski and Wang lifted 36,640 photos of men and 38,593 photos of women from online dating profiles and fed the shots into their program. They then coded the program to take account of features such as weight, hairstyle, jaw width, and nose length. Now they claim that, when presented with a single photo, the program can identify the subject's sexuality with 81 percent accuracy for men and 74 percent accuracy for women. (When the program is supplied with five images, these numbers rise to 91 and 83 percent, respectively.)

"Consistent with the prenatal hormone theory of sexual orientation, gay men and women tended to have gender-atypical facial morphology, expression, and grooming styles," Kosinski and Wang write in explaining their conclusions. In other words, they argue that gay men and women have a naturally more androgynous appearance than straight men and women.

Suffice it to say, the study raised more than a few eyebrows. Within hours of its publication, GLAAD and the Human Rights Campaign issued a joint statement condemning the research.

"At a time where minority groups are being targeted, these reckless findings could serve as a weapon to harm both heterosexuals who are inaccurately outed, as well as gay and lesbian people who are in situations where coming out is dangerous," said Jim Halloran, GLAAD's Chief Digital Officer. Ashland Johnson, HRC's Director of Education and Research, echoed this sentiment: "Stanford should distance itself from such junk science rather than lending its name and credibility to research that is dangerously flawed and leaves the world, and this case, millions of people's lives, worse and less safe than before."

The statement goes on to point out some critical flaws in the study (that it included only white people, that it did not account for bisexual individuals, and that the authors did not verify the age or sexual orientation of any of the subjects) before going on to say that "media headlines that claim AI can tell if someone is gay by looking at a photo of [their] face are factually inaccurate."

In an update to the study (added, notably, this morning, three days after the publication of the GLAAD and HRC statement), the duo noted that, given the increasing use by governments and companies of computer vision algorithms to detect "people's intimate traits," their "findings expose a threat to the privacy and safety of gay men and women."
